ACID, Isolation Levels, and MVCC: Architecture and Execution in Relational Databases

Picture ordering a book from an online store. You add it to your cart, enter payment details, and click "buy." The store removes the book from stock and charges your card. If the system crashes mid-process, you might pay without receiving the order, or the book could stay listed as available despite being sold. Database transactions prevent such issues by ensuring operations complete fully or not at all.

Transactions ensure data reliability in applications like e-commerce or social media, keeping information consistent despite concurrent users or hardware failures. By leveraging ACID properties, isolation levels, and Multiversion Concurrency Control (MVCC), databases avoid corruption, manage simultaneous updates, and maintain performance. For example, proper isolation prevents duplicate orders, while MVCC allows systems like PostgreSQL to handle high traffic efficiently. These mechanisms are essential for building apps users trust.

ACID Properties: The Foundation of Reliability

Database transactions rely on ACID properties to ensure data integrity and reliability, even under failure or concurrent operations. These properties—Atomicity, Consistency, Isolation, and Durability—form the cornerstone of robust database systems. Each addresses a specific aspect of transaction reliability, from guaranteeing complete execution to protecting against system crashes.

Atomicity ensures a transaction is treated as a single, indivisible unit. Either all operations complete successfully, or none are applied. Imagine an e-commerce order for a book (ID: 123) costing $20. The system must deduct one book from stock (from 5 to 4) and record a payment of $20 for user ID 456. If the system crashes after updating stock but before recording payment, atomicity triggers a rollback, undoing the stock change to prevent an inconsistent state.

Consistency guarantees that a transaction brings the database from one valid state to another, adhering to defined rules like foreign key constraints. For example, attempting to delete a product referenced by an active order violates a foreign key constraint, and the database rejects the operation to preserve relational integrity.

Isolation ensures transactions do not interfere with each other, even when executed concurrently. Partial changes from one transaction remain invisible to others until committed. For instance, if two transactions attempt to update the same data simultaneously, isolation prevents conflicts by controlling visibility of uncommitted changes.

Durability guarantees that once a transaction is committed, its changes are permanently saved, even if the system crashes immediately after. This is achieved through Write-Ahead Logging (WAL), where changes are logged to disk before being applied, ensuring committed data persists despite failures.

These properties collectively ensure transactions are reliable. However, trade-offs exist: strict ACID compliance, as in relational databases like PostgreSQL, can impact performance in high-throughput systems, unlike some NoSQL databases that relax consistency for speed.

BEGIN; UPDATE products SET stock = stock - 1 WHERE product_id = 123 AND stock > 0; INSERT INTO orders (user_id, product_id, amount) VALUES (456, 123, 20); COMMIT;

Deep Dive into Write-Ahead Logging (WAL): Ensuring Durability

Write-Ahead Logging (WAL) is a core mechanism in databases like PostgreSQL that guarantees durability by recording changes to a log before applying them to data files. This "write-ahead" approach ensures committed transactions survive crashes, as the log can be replayed to restore the database state. Key concepts include:

WAL (Write-Ahead Log): A sequential log file capturing all database modifications before they hit the main data pages.

LSN (Log Sequence Number): A unique 64-bit identifier for each WAL record, representing the byte offset and order of changes.

Page LSN: The LSN of the last modification stored in each data page's header, used to determine if a WAL record needs reapplication.

Checkpoint: A periodic process that flushes dirty data pages to disk and records a safe recovery point in pg_control, minimizing WAL replay during restarts.

XID (Transaction ID): A unique identifier for each transaction, tagging all related WAL records.

To illustrate WAL in action, consider a scenario with a transaction performing two large UPDATE operations on a table, each affecting 200,000 rows. The transaction commits, but a power failure occurs before data pages are flushed to disk.

BEGIN; UPDATE data SET val = val + 1 WHERE id <= 200000; UPDATE data SET val = val + 1 WHERE id > 200000; COMMIT;

Step 1: Transaction Execution

BEGIN: The transaction receives an XID, e.g., 5001. No WAL records yet, as no data has changed.

First UPDATE (200,000 rows):

Changes occur in shared buffers (memory): New row versions are created (via MVCC), old versions remain.

For each modified page:

A WAL record is generated, including XID=5001, page ID/offset, and change details (delta or full page if first post-checkpoint modification, to prevent partial writes).

LSNs are assigned, e.g., starting from 105000.

WAL records buffer in memory before periodic flushes.

Second UPDATE (200,000 rows): Similar process, generating additional WAL records with increasing LSNs.

Step 2: COMMIT

A COMMIT record (XLOG_XACT_COMMIT) is written to WAL with XID=5001 and LSN=106501.

fsync() ensures WAL up to this LSN is flushed to disk, guaranteeing durability.

Data pages remain dirty in memory (not yet flushed by bgwriter or checkpoint).

The COMMIT also updates the Commit Log (CLOG) to mark XID=5001 as committed.

Step 3: Power Failure

WAL (including COMMIT) is safely on disk.

Dirty data pages in memory are lost.

Step 4: Database Restart and Recovery

Read pg_control: Identifies the last checkpoint LSN, e.g., 104000. All data up to this point is on disk; recovery starts from here.

REDO Phase: Reads all WAL records from the checkpoint LSN forward, applying changes only when needed (regardless of transaction status, to restore physical page consistency):

For each WAL record (UPDATE1, UPDATE2, COMMIT):

Compare page LSN with WAL LSN: If page LSN < WAL LSN, apply the record to the buffer (later flushed to disk). If page LSN ≥ WAL LSN, skip it, as the change is already on disk.

Full page writes (if enabled) simplify this by copying entire pages.

COMMIT record updates CLOG: Marks XID=5001 as committed, making changes visible.

Handling Uncommitted Transactions: If a transaction lacks a COMMIT record, its XID is marked aborted in CLOG. REDO still applies its WAL records (for page consistency), but MVCC hides the tuples (xmin/xmax invalidate them). Autovacuum later cleans dead tuples.

Cleanup Phase: Releases locks, aborts open transactions, and performs any needed vacuuming.

Result: The 400,000 updated rows are restored from WAL, ensuring the committed transaction's effects persist.

WAL integrates with MVCC for efficient recovery without traditional UNDO logs. While it adds overhead (especially for large updates), optimizations like parallel recovery (PostgreSQL 9.6+) mitigate this. In ACID terms, WAL is pivotal for Durability, enabling reliable systems even in failure-prone environments.

Isolation Levels: Balancing Correctness and Performance

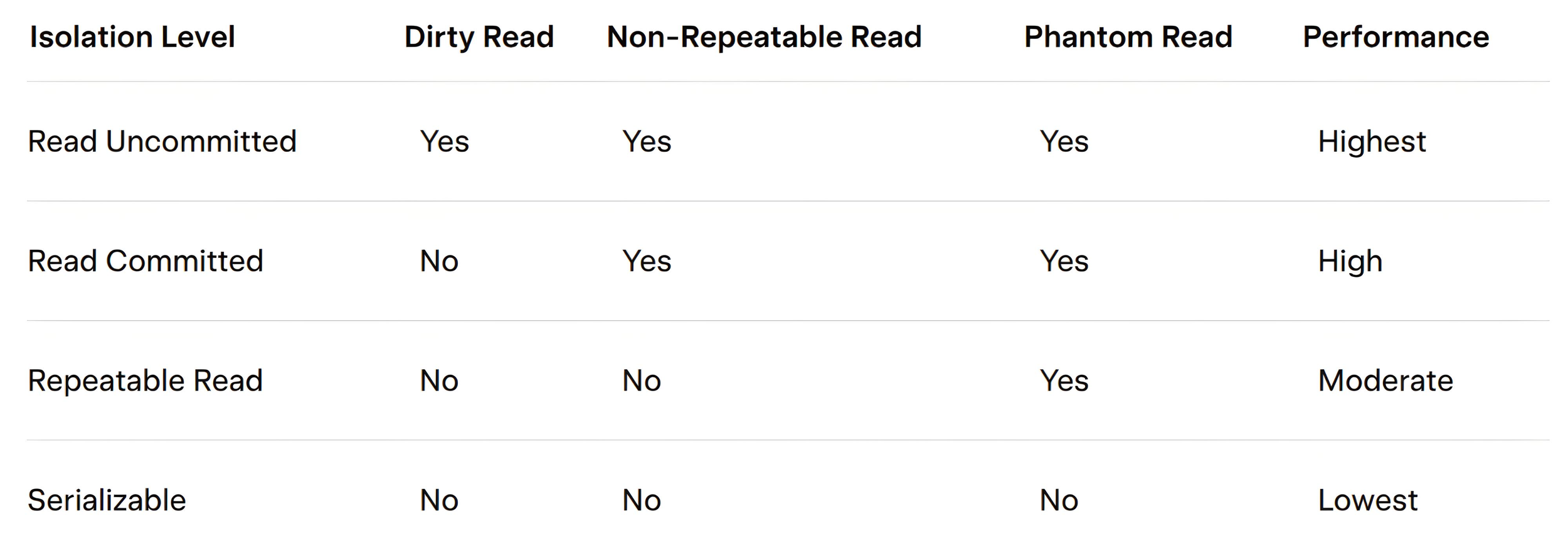

Isolation, a core ACID property, ensures transactions do not interfere with each other when executed concurrently. The ANSI SQL standard defines four isolation levels—Read Uncommitted, Read Committed, Repeatable Read, and Serializable—each balancing data consistency with performance. Higher levels prevent more anomalies but increase resource usage, while lower levels prioritize speed at the cost of potential issues.

Read Uncommitted allows transactions to read uncommitted changes from others, causing dirty reads, where a transaction sees uncommitted data that may later be rolled back. This level maximizes performance but risks inconsistent data and is rarely used.

Read Committed ensures transactions see only committed data, avoiding dirty reads. However, it permits non-repeatable reads, where data read earlier in a transaction changes due to another transaction’s commit, leading to potential inconsistencies.

Repeatable Read prevents non-repeatable reads by locking or snapshotting read data, ensuring it remains unchanged within the transaction. It still allows phantom reads, where new rows appear or disappear in a query’s result set due to another transaction’s changes.

Serializable provides complete isolation, as if transactions execute sequentially, eliminating all anomalies, including phantom reads. It uses additional checks or locks, reducing performance in high-concurrency systems.

Example: table row {id: 123, state: 1, val: 5}

-- Session 1

BEGIN;

SELECT * FROM products WHERE state = 1; -- Returns {id: 123, state: 1, val: 5}

-- Session 2

BEGIN;

INSERT INTO products (id, state, val) VALUES (124, 1, 3);

UPDATE products SET val = 4 WHERE id = 123;

COMMIT;

-- Session 1

SELECT * FROM products WHERE state = 1; -- Result depends on isolation level

COMMIT;

Read Uncommitted: Second SELECT shows

> {id: 123, state: 1, val: 4}, {id: 124, state: 1, val: 3}

even if Session 2 hasn’t committed. Both Non-Repeatable Read (val changes from 5 to 4) and Phantom Read (new row id: 124 appears) occur.

Read Committed: Second SELECT shows

> {id: 123, state: 1, val: 4}, {id: 124, state: 1, val: 3}

after Session 2 commits. Both Non-Repeatable Read (val changes) and Phantom Read (new row appears) occur.

Repeatable Read: Second SELECT shows

> {id: 123, state: 1, val: 5}, {id: 124, state: 1, val: 3}

Only Phantom Read occurs (new row appears); Non-Repeatable Read is prevented, as val stays 5 due to MVCC snapshot.

Serializable: Second SELECT shows

> {id: 123, state: 1, val: 5}

Neither Non-Repeatable Read nor Phantom Read occurs, ensuring sequential consistency.

Deadlocks in Transactions

Deadlocks arise when two or more transactions mutually wait for resources held by each other, creating a cycle that halts progress. In databases, this typically involves row locks, where one transaction locks a row needed by another, and vice versa.

Deadlocks happen in concurrent environments with shared resources. They are more frequent at higher isolation levels like Repeatable Read or Serializable, where locks protect against anomalies.

For example, two transactions selling products can deadlock:

Transaction 1 locks product ID: 123 to update stock, then waits for ID: 124.

Transaction 2 locks product ID: 124, then waits for ID: 123.

This forms a cycle: neither can proceed.

Databases detect deadlocks via algorithms checking for cycles in wait graphs (e.g., PostgreSQL scans pg_locks periodically). Upon detection, the DB resolves by aborting one transaction (usually the younger or lower-cost one), rolling it back, and releasing its locks. The aborted transaction gets an error like "deadlock detected" and can retry.

-- Session 1 BEGIN; UPDATE products SET stock = stock - 1 WHERE product_id = 123; -- Locks 123 -- Pause UPDATE products SET stock = stock - 1 WHERE product_id = 124; -- Waits for 124 -- Session 2 BEGIN; UPDATE products SET stock = stock - 1 WHERE product_id = 124; -- Locks 124 UPDATE products SET stock = stock - 1 WHERE product_id = 123; -- Waits for 123, deadlock -- DB aborts one (e.g., Session 2) with "deadlock detected"

MVCC: Multiversion Concurrency Control in Action

MVCC (Multiversion Concurrency Control) lets databases like PostgreSQL handle many users reading and writing data at once without slowing down. Instead of locking rows, it keeps multiple versions of data, so each user sees a consistent snapshot of the database. This cuts delays and boosts speed for busy systems.

Every change in the database gets a transaction ID (XID), a unique number tracking who did what. Rows store fields like xmin (who created it) and xmax (who outdated it). The cmin/cmax fields track smaller steps inside a transaction, like the order of updates or nested blocks. These fields decide which row version is visible.

A snapshot captures the database state when a transaction starts, listing active and completed transactions. It checks if a row’s creator (xmin) is valid and if it’s not outdated (xmax). In Read Committed, snapshots update with each query to show new changes. In Repeatable Read, the snapshot stays fixed, keeping reads steady.

When a row is updated, MVCC makes a new version and marks the old one dead. These dead rows stick around until no user needs them, but they bloat tables, slowing queries. Vacuum cleans up by removing dead rows that no transaction can see, like a cleanup crew checking if data is still in use. This works like a counter: if no active transaction references a row version, it’s deleted. Long-running transactions can delay this, so tuning vacuum is key.

MVCC speeds up systems by avoiding locks but needs storage for versions and cleanup effort. It supports isolation levels, ensuring stable reads or strict consistency when needed.

-- Session 1: Repeatable Read SET TRANSACTION ISOLATION LEVEL REPEATABLE READ; BEGIN; SELECT stock FROM products WHERE product_id = 123; -- Shows 5 -- Session 2 BEGIN; UPDATE products SET stock = 4; -- New version, old one marked dead COMMIT; -- Session 1 SELECT stock FROM products WHERE product_id = 123; -- Still 5 COMMIT;

Vacuum later removes the dead version when no transaction needs it. MVCC keeps things fast but needs regular cleanup to avoid bloat.

Vacuum: PostgreSQL's Maintenance Tool

Vacuum is PostgreSQL’s built-in maintenance command that reclaims storage, updates statistics, and prevents issues like transaction ID overflow. It keeps databases efficient by cleaning up unused space, improving query performance, and ensuring long-term stability.

In the context of Multiversion Concurrency Control (MVCC), Vacuum focuses on dead tuples—outdated row versions created during updates or deletes. For example, updating a product’s stock (ID: 123) from 5 to 4 leaves a dead tuple that bloats the table. Vacuum scans for tuples invisible to active transactions (based on snapshots) and marks their space reusable in the Free Space Map (FSM), reducing bloat without shrinking the table file.

In a broader sense, Vacuum does more: it updates query planner statistics (with ANALYZE) for better plans, cleans index references to dead tuples, and freezes old transaction IDs to avoid XID wraparound (a limit at ~2 billion). Autovacuum runs this automatically (e.g., at 20% dead rows). VACUUM FULL rebuilds tables for defragmentation, compacting space and returning it to the OS, but locks the table. Bloat from dead tuples can double table size (e.g., 50 MB to 100 MB), slowing I/O; monitoring with pgstattuple helps catch it early.

Example in SQL

-- Bloat from updates

UPDATE products SET stock = stock - 1 WHERE product_id = 123; -- Adds dead tuple

-- Check bloat

SELECT * FROM pgstattuple('products'); -- ~40% dead space

-- Vacuum

VACUUM ANALYZE products; -- Cleans tuples, updates stats

-- After

SELECT * FROM pgstattuple('products'); -- Dead space near 0%

Vacuum maintains database health, but tuning autovacuum prevents unchecked bloat.

Practical Advice and Conclusion

Understanding transactions helps build reliable systems. Here’s how to apply the concepts effectively:

Choose isolation levels based on needs: Start with Read Committed for most cases, adjusting to Repeatable Read or Serializable if anomalies like phantom reads impact your app.

Tune MVCC for performance: Adjust autovacuum settings (e.g., lower autovacuum_vacuum_scale_factor to 0.1) to control bloat from dead tuples. Monitor with pgstattuple to catch growth early.

Avoid deadlocks: Update rows in a consistent order (e.g., by ID) and keep transactions short to reduce lock conflicts.

Track issues: Check pg_stat_activity for long-running transactions and pg_locks for lock waits to spot problems before they escalate.

Grasping ACID properties, isolation levels, MVCC, and Vacuum unlocks better design and troubleshooting. It ensures data stays intact under pressure and sets the stage for tackling distributed systems, where new challenges like sagas await.